Dockerize your MERN + Flask app

Run Next.js, Express.js, Flask, MongoDB, and Redis containers with a single command

Hello developers,

In this short😂 article, we will try to understand why and how to use docker in your next project.

Why should I dockerize my project?

Suppose a new developer joins our team. Rather than taking up other developers' time setting up the project, the new member can just run docker-compose up and get all the services up and running🚀

There have been many instances where code runs on a local machine but breaks on someone else's or in production. It happens because of different versions of libraries, configs, and services like databases or caches. Docker installs all the services and runs them in an isolated environment as per the given instructions.

Docker also helps to build workflows and automation for testing and linting with CI/CD pipelines which makes it easier to deploy to production.

Note - This tutorial is not for absolute beginners but I'll still recommend reading it. If you don't have docker installed on your machine, please check it out on youtube.

App Description

Suppose your app has

Two servers running on -

- Express.js API - http://localhost:8000

- Flask API - http://localhost:5000

Two services running for Express server -

- Mongo Db - mongodb://localhost:27017/db_name

- Redis - redis://localhost:6379

React/Next.js frontend running - http://localhost:3000

Glossary -

Containers vs Images - We can think of images as classes in OOP and containers as instances of those classes. Images are mostly large files built from a Dockerfile, and containers are isolated environments that run instances of those images. Images are stored locally but can be pushed to a Docker registry such as registry.hub.docker.com to share with other members. In our example, every server and service runs from its own image.
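The class/instance analogy can be sketched in a few lines of Python. This is only an illustration of the idea: the Image and Container classes below are hypothetical and have nothing to do with Docker's real API.

```python
# Analogy only: these classes are hypothetical and are not part of any Docker API.
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """Read-only template, comparable to the result of `docker build`."""
    name: str
    files: tuple  # files baked in at build time


@dataclass
class Container:
    """Running instance of an image with its own writable state."""
    image: Image
    changes: dict = field(default_factory=dict)

    def write(self, path, content):
        # Writes land in this container only; the image stays untouched.
        self.changes[path] = content


image = Image(name="app-flask-api", files=("main.py", "requirements.txt"))
first = Container(image)
second = Container(image)

first.write("/tmp/cache.txt", "hello")

print(first.changes)   # only the first container sees the new file
print(second.changes)  # the second container starts clean
```

Both containers share the same image, but a file written in one of them is not visible in the other. This is also why data written inside a container is gone once the container is removed.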

Docker compose - It's a daunting task to build and run images for every service for a bigger project. So, we use docker compose to consolidate all these docker images and make them build and run together.

Volumes - Volumes are storage used for persistence. If we don't use volumes in services like Mongo DB and Redis, all the data will be lost as soon as the container is removed and can't be accessed after running a new container. We also use volumes to map/mirror local code/files with the code/files inside the environment so that any changes made in the local code get mirrored and the server can be rerun with tools like nodemon.

Dockerfile - Dockerfile has the set of instructions to build the image. It's kinda like GitHub actions.

.dockerignore - This file is like .gitignore file and has a list of excluded modules and files that you don't want in your isolated environment. ex. node_modules.

FROM - The FROM instruction initializes a new build stage and sets the base image (python for a Flask project and node for node-based projects). A valid Dockerfile must start with a FROM instruction. It will pull the image from the public repo (Dockerhub) if it is not available locally. Always try to find a lighter version of the image (ex. alpine for node.js) to lower the overall size of the app's image.

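EXPOSE - EXPOSE documents the port that the app inside the container listens on. It does not publish the port by itself. To reach the app from the host, we map a host port to the container port with -p in docker run or with ports in docker-compose.yml. We map the same port number on both sides so that we can use the same port on localhost as written in the code.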

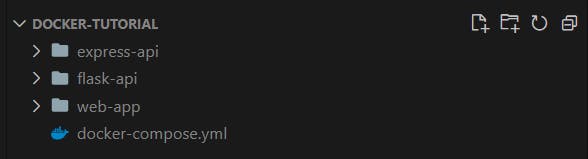

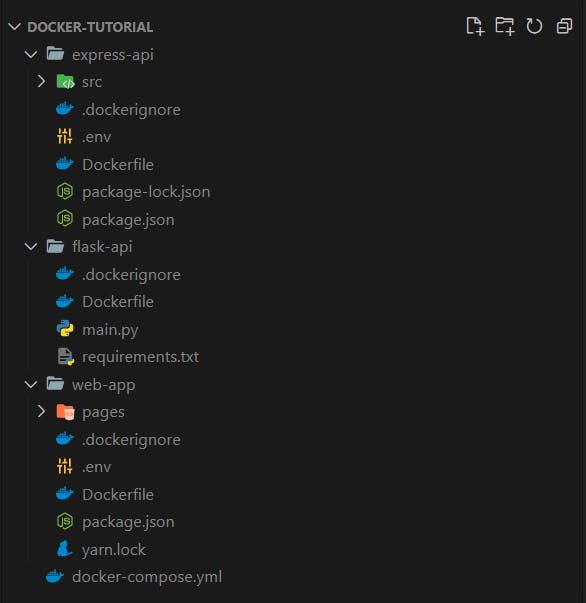

Folder structure of the project

Dockerfiles for Services

- Flask API -

- Running flask will need python.

- set working directory inside environment (directory ai-models will be created by docker).

- copy requirements.txt file from the host into the container.

- RUN the command to install dependencies mentioned in the requirements.txt.

- Now, COPY all the remaining files inside the container.

- Set required env variables inside the container.

- Enter the final command for running the server with CMD.

Dockerfile -

FROM python:3.7-slim-buster

WORKDIR /usr/src/ai-models

COPY requirements.txt .

RUN pip3 install -r requirements.txt

COPY . .

# To use flask run instead of python main.py

ENV FLASK_APP=main.py

CMD ["flask", "run", "--host", "0.0.0.0"]

.dockerignore - I have used virtual environment (skip this if you haven't)

/venv

Build and spin up the container alone - If you want to run a single server, you can build the image of that server and spin up a container for that image.

a. Move into the API directory

cd flask-api

b. Build an image - The next step is to build the image with a tag (i.e. the name of the image) and the Dockerfile location ('.' => current directory)

docker build -t app-flask-api .

c. Run the container - Map the ports(-p) and spin up the container in detached mode (-d) to get API working

docker run -dp 5000:5000 app-flask-api
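To confirm that the container really answers on the published port, a quick check from the host can help. Below is a minimal sketch using only the Python standard library. It assumes the container was started with -p 5000:5000 and that requesting the root path / is enough to tell whether the server is up (the routes of the API are not part of this article). The helper name is_api_up is made up for this example.

```python
import urllib.error
import urllib.request


def is_api_up(url, timeout=3.0):
    """Return True if a server answers the request at all, even with an HTTP error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied (for example with 404), so the container is reachable.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or DNS failure: nothing is listening.
        return False


if __name__ == "__main__":
    # Assumption: the Flask container publishes port 5000 on localhost.
    print(is_api_up("http://localhost:5000/"))
```

If this prints False right after docker run, give the server a second or two to boot and check docker ps to see whether the container is still running.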

- Express API -

- Running Express will need nodejs as a base image

- Use labels to describe the image (optional)

- set working directory inside environment

- copy package.json and package-lock.json files from the host into the container

- RUN a command to install dependencies mentioned in the package.json. If you use npm ci, it's important to have package-lock.json file inside the environment.

- Now, COPY all the remaining files inside the container.

- Set required env variables inside the container (if any or want to run it alone)

- Enter the final command for running the server with CMD

Dockerfile -

FROM node:alpine

LABEL version="1.0.0"

LABEL description="Community API server's image"

WORKDIR /usr/src/api

COPY package*.json ./

# RUN yarn install --immutable

RUN npm ci

COPY . .

# CMD [ "yarn", "dev" ]

CMD [ "npm", "run", "dev" ]

.dockerignore - To avoid errors do not copy node_modules into your container.

node_modules

- React/Next.js frontend -

- React's image can be built by following the same steps as the Express API.

Dockerfile -

FROM node:alpine

LABEL version="1.0.0"

LABEL description="Next.js frontend image"

WORKDIR /usr/src/web

COPY package*.json ./

COPY yarn.lock .

# RUN npm ci

RUN yarn install --immutable

COPY . .

# CMD [ "npm", "run", "dev" ]

CMD [ "yarn", "dev" ]

.dockerignore - To avoid errors do not copy node_modules into your container.

node_modules

Docker compose - We will set the instructions needed to spin up all the services and API containers with a single command in the docker-compose.yml file.

- We will use version 3.8 of docker-compose file formatting

- Every image that is needed to spin up the container is a service

  a. Redis - cache_service (can be named anything)

  b. Mongo Database - db_service

  c. Flask API for AI models - api_models

  d. Express API - api_community

  e. Frontend web server - web

- For Redis and Mongo DB services, we will use prebuilt images from the public repository (Dockerhub). For other services, we will build the images based on the Dockerfiles that we have written.

- We will use named volumes for the persistence of the data (in Redis and Mongo DB services) and bind mounts for mapping the files between host and container (in APIs and frontend). Named volumes need to be declared under the top-level volumes key before we can use them inside any service.

- restart: always makes sure that services will be restarted after every crash

- Mention all of the env variables under environment.

- By default Compose sets up a single network for your app that is shared between different services, but we can specify our own custom network (here, shared_network) that could be different for different services. When we run docker-compose up, all the containers will join the specified networks.

- Hosts of the Redis and Mongo DB will not be localhost anymore but the corresponding service.

  - Redis - redis://cache_service:6379

  - Mongo db - mongodb://db_service:27017/db_name

- Map all the required ports, so that they can be accessible from the host

- Mention that express API depends_on cache_service and db_service

docker-compose.yml -

version: "3.8"

services:
  cache_service:
    container_name: cache_service
    image: redis:6.2-alpine
    restart: always
    volumes:
      - cache_service:/data/
    ports:
      - 6379:6379
    networks:
      - shared_network

  db_service:
    container_name: db_service
    image: mongo
    restart: always
    volumes:
      - db_service:/data/db
    ports:
      - 27017:27017
    networks:
      - shared_network

  api_models:
    container_name: api_models
    build:
      context: ./flask-api
      dockerfile: Dockerfile
    volumes:
      - ./flask-api:/usr/src/ai-models
    ports:
      - 5000:5000
    restart: always
    networks:
      - shared_network

  api_community:
    container_name: api_community
    depends_on:
      - cache_service
      - db_service
    build:
      context: ./express-api # Path to the directory of Express server
      dockerfile: Dockerfile # name of the Dockerfile
    restart: always
    volumes:
      # Map local code to the code inside container and exclude node_modules
      - ./express-api:/usr/src/api
      - /usr/src/api/node_modules
    ports:
      - 8000:8000
    environment:
      - PORT=8000
      - DB_URI=mongodb://db_service:27017/db_name
      - REDIS_URL=redis://cache_service:6379
      - ACCESS_TOKEN_SECRET=12jkbsjkfbasjfakb12j4b12jbk4
      - REFRESH_TOKEN_SECRET=lajsbfqjb2l1b2l4b1lasasg121
    networks:
      - shared_network

  web:
    container_name: web
    depends_on:
      - api_community
    build:
      context: ./web-app
      dockerfile: Dockerfile
    restart: always
    volumes:
      - ./web-app:/usr/src/web
      - /usr/src/web/node_modules
    ports:
      - 3000:3000
    networks:
      - shared_network

volumes:
  db_service:
    driver: local
  cache_service:
    driver: local

# [optional] If the network is not created, docker will create by itself
networks:
  shared_network:
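Two details of this file are easy to trip over. First, inside the Compose network the database and cache are reached by service name, so the connection URLs should come from env variables like DB_URI and REDIS_URL instead of being hardcoded to localhost. Second, depends_on in this short form only controls the start order; it does not wait until Mongo DB or Redis actually accept connections. The sketch below shows one possible way to handle both in Python, for example if the Flask service also needed the database. The function names service_address and wait_for_port, the retry counts, and the localhost defaults are assumptions made for this example, not something taken from the project.

```python
import os
import socket
import time
from urllib.parse import urlparse

# Defaults match the local (non-Docker) setup; Compose overrides them via `environment`.
DEFAULT_DB_URI = "mongodb://localhost:27017/db_name"
DEFAULT_REDIS_URL = "redis://localhost:6379"


def service_address(url):
    """Extract (host, port) from a URL such as redis://cache_service:6379."""
    parsed = urlparse(url)
    return parsed.hostname, parsed.port


def wait_for_port(host, port, attempts=5, delay=0.5):
    """Retry a TCP connection until it succeeds or the attempts run out."""
    for _ in range(attempts):
        try:
            with socket.create_connection((host, port), timeout=2.0):
                return True
        except OSError:
            # Not reachable yet (refused, timed out, or name not resolvable).
            time.sleep(delay)
    return False


if __name__ == "__main__":
    for name, default in (("DB_URI", DEFAULT_DB_URI), ("REDIS_URL", DEFAULT_REDIS_URL)):
        host, port = service_address(os.environ.get(name, default))
        status = "ready" if wait_for_port(host, port) else "unreachable"
        print(f"{name}: {host}:{port} is {status}")
```

Run on the host without the env variables, it checks localhost. Run inside a container that has DB_URI and REDIS_URL set as in the Compose file, it checks db_service and cache_service instead. A successful TCP connection only shows that the port is open, so real clients should still handle connection errors and retries on their own.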

And we are done, I think

To run all the containers, go to the root directory where docker-compose.yml resides and -

docker-compose up

To stop the containers

docker-compose down

If you have made it till here, WOW

Follow for more cool articles

Thanks 😎